
The separate directories are one of the more annoying things about SVN. Besides, this is an invalid argument: nothing stops someone from cloning a Git repository as many times as they want, into as many directories as they want, and checking out whichever branch they want in each folder. You can run a whole distributed system locally without needing a server.

Git also supports NOT having a different folder for each branch. SVN supports this too, with switch, if you want to risk losing all your code. I recently switched from Subversion to Git. But this turns the conversation into an apples vs. oranges comparison: Git is a distributed version control system. In fact, this is what Linus does. If we want to get extremely picky and compare SVN vs. Git when adding a new file, these are the only commands you need:

I submit the anecdotal evidence that I have accidentally destroyed many hours of work by running commands that sounded totally reasonable but turned out to permanently destroy data.

In the end I recloned, accepting the lost time rather than investing much more time that I could spend recreating the work instead. It is unfortunate you lost code.

That is probably every developer's worst nightmare. Even if you go through and force deletes (which can easily be locked out), the data still exists in the object store until Git's garbage collection prunes it. So the commit graph shows everything that has happened.

Git rebase replays the commits you have locally on top of commits that have been fetched from a remote. Think of it as time travel: if you were the only one who experienced those events, no biggie. The same goes if you check in a password and need to get rid of it. And even if you do manage to remove something, it is almost guaranteed to still exist until Git's garbage collection prunes it.
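To make the "replay" idea concrete, here is a toy model in Python; it is not Git's implementation, and the Commit class and rebase function are hypothetical. Each commit's id is derived from its message and its parent's id, so re-creating a local commit on top of a different upstream tip necessarily produces a new id. That is the sense in which rebasing rewrites history.

```python
import hashlib
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Commit:
    """Toy commit: its id depends on the message AND the parent id."""
    message: str
    parent: Optional["Commit"]

    @property
    def id(self) -> str:
        parent_id = self.parent.id if self.parent else ""
        data = f"{parent_id}\n{self.message}".encode()
        return hashlib.sha1(data).hexdigest()[:7]


def ancestors(commit: Optional[Commit]) -> list:
    """Return the commit and all of its ancestors, newest first."""
    chain = []
    while commit is not None:
        chain.append(commit)
        commit = commit.parent
    return chain


def rebase(local: Commit, upstream: Commit) -> Commit:
    """Replay the commits unique to `local` on top of `upstream`."""
    upstream_ids = {c.id for c in ancestors(upstream)}
    # Commits reachable from local but not from upstream, oldest first.
    to_replay = [c for c in ancestors(local) if c.id not in upstream_ids]
    to_replay.reverse()
    new_tip = upstream
    for c in to_replay:
        # Same message, new parent: this yields a brand-new commit id.
        new_tip = Commit(c.message, new_tip)
    return new_tip
```

For example, with base = Commit("base", None), an upstream commit theirs = Commit("fix typo", base) and a local commit mine = Commit("add feature", base), rebase(mine, theirs) returns a commit whose parent is theirs and whose id differs from mine.id, even though the message is unchanged.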

Git is distributed version control and Subversion is not. Hence there are extra steps in committing, because there is a remote repo to consider, which does not need to be updated on every single commit. As for then having to issue pull requests, that is again just an extra step that is not needed on every commit. So, more often than not, git actually takes the same number of steps to store code changes as Subversion.

It also refers to making a frustrated and ignorant assumption about the current state of your local repo, index, or working directory. How does GitHub for Windows affect the equation? It is supposed to be a superior UI, at least for Git. As far as I know, git was born out of necessity.

Git was designed for versioning the Linux kernel, which is not the kind of software project you and I work on daily.

The "hacks" were not bolted on later; they were designed into the core model. It is not a mangoes-to-mangoes comparison. If comparing is necessary, one could point out what Linus says in a talk: that Subversion is the most pointless software project ever started. Code is meant to be read. Rebasing lets you work however you want on your repo, then clean things up so that your commits are easier to read for others and for your future self!

This is a good thing. If you really wanted to preserve the original commits, you could probably work in one branch and then rebase onto another branch. Fossil is also good: it provides a fully implemented DVCS and can be simplified via autosync mode. Its only weakness is that it cannot handle huge source trees.

Not stopping history rewrites, and telling developers to use rebase, is the quickest way to lose days or even weeks of work. But as for the first two points: yes, the information model is somewhat complex, but from my point of view the problem is not the number of features git supports, but the number of things you have to know before you can work with git. Every time I try to teach someone some basic git commands, nobody understands the staging thing the first time. I might be a bad teacher, but I think it is just a little too abstract.

Finally, you can use whatever front-end you like with git. Have a play creating blobs, trees, and commits. I heartily recommend perusing the documentation and the many fine books about how git internals work. Once you realise it's just a big graph, glued together with SHA-1 and zlib compression, and all the functions are just different methods of mutating the graph, it seems much, much simpler.

Edward: steveko: In a nutshell, if GitHub turns around tomorrow and demands money, or cuts off access, then everyone who currently uses GitHub will simply switch to another repo for upstream.
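As a small taste of "playing with blobs", the Python sketch below shows how Git names a blob: the SHA-1 of a header (the word blob, a space, the content length in bytes, a NUL byte) followed by the content, with the same bytes stored zlib-compressed as a loose object. It should agree with git hash-object in a SHA-1 repository, but treat it as an illustration; trees and commits follow the same pattern with different headers and payloads.

```python
import hashlib
import zlib


def blob_object(content: bytes) -> bytes:
    """Raw (uncompressed) loose-object bytes for a blob."""
    header = b"blob " + str(len(content)).encode("ascii") + b"\0"
    return header + content


def blob_id(content: bytes) -> str:
    """SHA-1 object id of a blob, as git hash-object reports it."""
    return hashlib.sha1(blob_object(content)).hexdigest()


def compressed_blob(content: bytes) -> bytes:
    """Loose objects are stored on disk zlib-compressed."""
    return zlib.compress(blob_object(content))


if __name__ == "__main__":
    print(blob_id(b""))  # e69de29bb2d1d6434b8b29ae775ad8c2e48c5391, the empty blob
    print(blob_id(b"hello\n"))
```

Because the id covers the content, identical files anywhere in history share one blob, which is part of why the "big graph" view holds together so well.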

The problem is that this is probably not the case, so there is at least some lock-in. For the record, I am one who loves hg but has found git difficult to work with, and has also found hg-git to work very well.

That way you have all your bugs in your repo, and ideally you do the same for your documentation. That is why I never fully committed to learning Git. I am dead sure it will be replaced by something simpler in the future. Yeah, there could be some truth in this. It took something like Git to make everyone see the benefits and potential of DVCS; now someone just needs to refine it. In other words: Waaahhh! The complexity serves a purpose, and is actually very elegant.

Until we end up with an iPad with Clippy running on it, that is useless for anyone with a working brain. But of course few people get to choose their VCS software anyway. Refusing to use Git vastly reduces the number of open source projects you can contribute to. More like a waste of time. Being decentralized is great and so are easy branches, etc.

Well said! But the badly designed (or apparently not designed at all) user interface is still a problem, and not just because I have to read the man page every time I need to know which git reset flag I need. This is a nice write-up, and many of your points are valid. The thing that I like about Git is that, despite what you say in 5, there is a lot of abstraction and quite a few nice shortcuts.

For example, you can skip the whole staging process (git add) by passing the -a flag to git commit. But the SVN concepts were easier to grasp. An extra option flag bolted onto one command is not an elegant abstraction, whereas a setting that completely hid the index from sight would be. There is a sweet spot where SVN works quite well: making small changes directly to trunk, which is typical for small-scale open source projects. You update, code, update again, resolve the rare conflict, and commit: simple and easy to understand.
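The trade-off between the index and -a can be pictured with a toy model of Git's three "trees" (working directory, index, HEAD). The ToyRepo class below is hypothetical Python, not Git code: commit() snapshots only what is staged, while commit(all_tracked=True) first stages modifications and deletions of already-tracked files, which matches the documented behaviour of git commit -a (new, untracked files are not picked up). It does not model Git refusing an empty commit.

```python
from dataclasses import dataclass, field


@dataclass
class ToyRepo:
    """Hypothetical model of Git's working dir, index and HEAD snapshots."""
    worktree: dict = field(default_factory=dict)
    index: dict = field(default_factory=dict)
    head: dict = field(default_factory=dict)

    def add(self, path: str) -> None:
        """Like git add: copy the working-tree version into the index."""
        self.index[path] = self.worktree[path]

    def commit(self, all_tracked: bool = False) -> dict:
        """Snapshot the index into HEAD.

        With all_tracked=True, mimic git commit -a: first stage
        modifications and deletions of files the index already tracks.
        Untracked files are left alone.
        """
        if all_tracked:
            for path in list(self.index):
                if path in self.worktree:
                    self.index[path] = self.worktree[path]
                else:
                    del self.index[path]
        self.head = dict(self.index)
        return self.head
```

The point of the index is the middle column: you can stage part of your edits and commit them while the rest stay in the working directory, which is exactly what a "hide the index" setting would give up.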

If you want to create two separate patches for the same file, or you are asked to fix something in your patch, things get messy quickly. At the other end of the scale, if you are a major contributor to a project of nontrivial size which needs versioning and backwards compatibility, or has big rewrite efforts which should not conflict with the continuous maintenance going on (in other words, you need multiple branches), then SVN starts sucking badly: its merging behavior is erratic at best.

Files disappear from the diff (because a merged add is actually not an add but a copy), commits start conflicting with themselves, code is duplicated without any edit conflict, and worse. Not to mention that the whole thing is excruciatingly slow.

Why make an inscrutable prompt when there is already a great prompt built into git-completion? As someone relatively new to git, the thing I find really messy is submodules.

The concept is great but the implementation sucks. Tools and apps with intuitive interfaces and workflows have been around for a couple of decades, at least, so expecting something to be straightforward to operate is not an unrealistic dream. But why do you want to make people change git for that? The complexity is harder to understand, but once you have understood it, it gives you much more power over your repository.

But if you make it simpler, it will automatically destroy the advantages people love it for. There are many ways that the usability of Git could be improved without decreasing its power at all. Yeah, I sometimes use EasyGit, but it definitely runs a risk of creating even more commands to remember. It should give pointers to the Git developers, though. I think the problem with feature branches is not that you can use them, but that you have to.

Feature branches are great when you do somewhat larger development. But they are needless complexity when you just want to make some smaller changes. Git does not merely allow you to add names to your changes, it enforces that, even for the most trivial changes. In projects where many people work simultaneously from a given base (either large or small) and merge only after many smaller changes, that forcing does no harm, because they would want to separate and name their changes anyway.

But there, persistent naming would actually be more helpful, so that people could later retrieve the reason a given change was added. Instead, that information is lost. So the forcing does not help for large projects and does harm for small projects. I like the way you explained certain things, but the illustrative diagrams are even better. Thanks. I wholeheartedly agree. Git makes simple things hard and has a completely unintuitive CLI. Frontends like EGit do not help much.

So the really interesting question is: why is Git so popular when there is an equivalent but better alternative? Second point, a good strategic option (likely not planned, though): most of the time the maintainer chooses the DVCS, so cater to the maintainer to get spread around. Linus scratched his itch. Other maintainers had the same itch.

Now the users are itching all over, but they do not get to choose the DVCS, except with hg-git. The way I like to put it is, Git was written by Martians. All your points are dead on. You only need one git command and a second version control system to get by: git clone to download the source code, and a second version control system, like my home-grown svc shell version control, to create a patch to send upstream by mail.

The drawback is that you won't find my tag in any Git-based project. Try downloading the Android CyanogenMod source: you will likely need a day, even with a fast internet connection. I love git, but I agree that some of the commands are difficult to understand, and the man pages for commands like rebase are awful. Pull fetches all branches but only merges or rebases the currently checked-out branch (HEAD), while push pushes ALL your branches to any matching remote branches unless you specify which branch you want to push.
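For context on the push behaviour described above: what a bare git push sends is governed by the push.default setting. Its old default, matching, pushed every local branch that has a same-named branch on the remote; Git 2.0 changed the default to simple, which pushes only the current branch. The hypothetical Python function below is a toy model of that difference, not Git's code, and it ignores the upstream-tracking checks that simple also performs.

```python
def branches_to_push(local: set, remote: set, current: str, mode: str) -> set:
    """Toy model of which local branches a bare `git push` would update.

    mode="matching": every local branch that also exists on the remote.
    mode="simple":   only the current branch (upstream checks not modelled).
    """
    if mode == "matching":
        return local & remote
    if mode == "simple":
        return {current}
    raise ValueError(f"mode not modelled: {mode}")
```

Under matching, a stale local copy of some old branch gets pushed along with your current work simply because the remote has a branch of the same name, which is the surprise the comment describes.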

Git could definitely use a UI makeover. I also agree that Git has a steep learning curve. I also like how open source projects use pull requests, which are infinitely better than the SVN equivalent: emailing patches to the maintainer. I also have trouble without the index when going back to SVN. It doesn't seem like a super useful feature until you go back to a system without it.

For those of you who think that power has to come with a crappy user interface, take this post I wrote a year ago as an example of something that is an obvious mistake in UI design. It just makes it harder to learn. Even SVN is too difficult if you need to persuade non-software-developers to commit their code, so I agree with your thesis and then some!

The philosophy behind Git and most DVCSs out there is that you need that complexity to manage the problem of version control effectively. For instance, you mention the stash, which is something svn intends to add as well. Man pages suck, but I reckon Git has about the best docs out there: there is a huge community, a lot of books, etc.

Not sure what the example is about; is that a regular workflow for you? It seems like an exceptional flow. Not true. The Git model is clearly appreciated by contributors, as its massive adoption shows. What helps maintainers is also good for contributors. I just press commit and push. Yes, this is scary about Git. There is a backup that gets most changes back. Although it is sometimes useful, I feel this ease of destroying history breaks a golden rule.

Same as 7. I do think in a DVCS world it makes some sense to clean up a bit. I like the IBM Jazz model a lot. Same as 5. Git is by no means a perfect tool. But it is a massive improvement over SVN, especially for distributed open source development. If you need a complex tool to manage the problem, then why can Mercurial do it as well while staying simple?

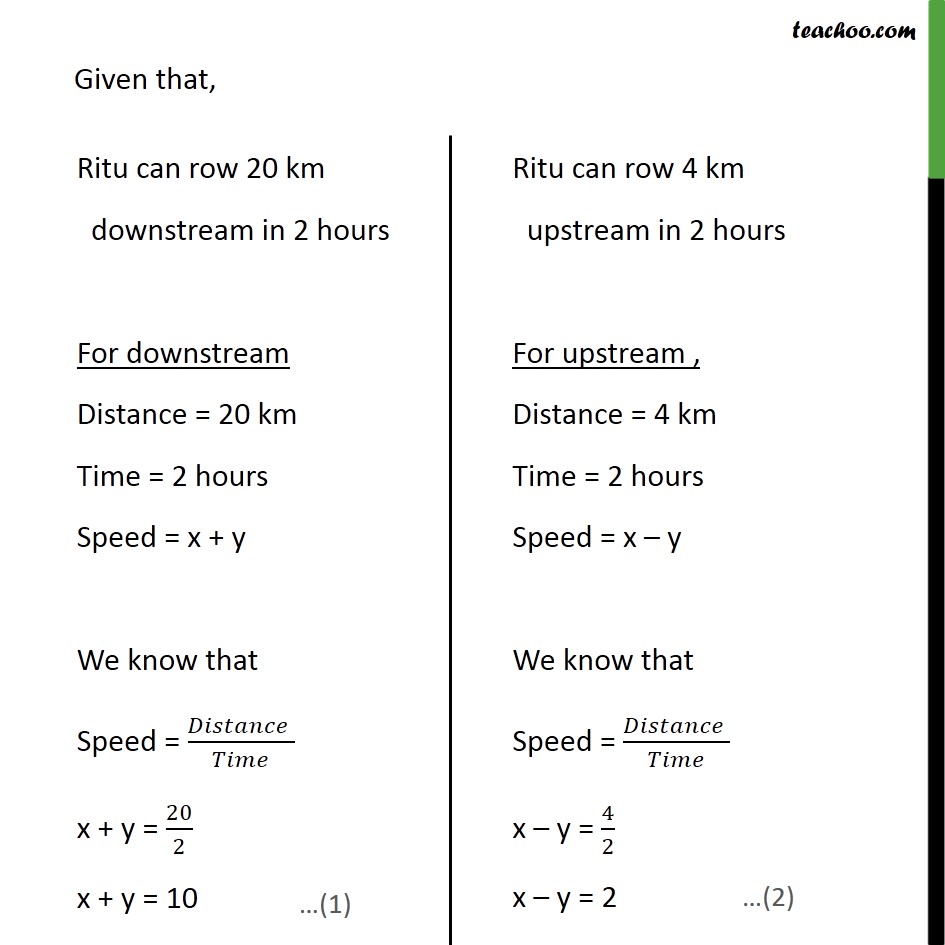

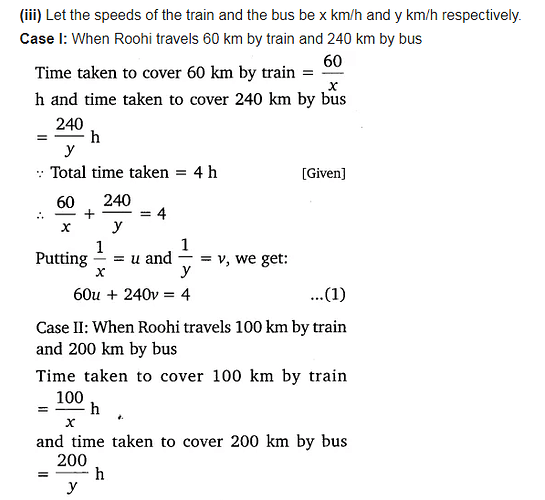

In that case you should just use it for some time, and have a look at hg-git, which provides a transparent bridge. So everything you can do in git can be done in hg, too, but most things are much easier. Let me know when you find such a system. There is a substitute. The kind that uses the best tool for the job. I stand by my statement that git is the most powerful.

You can moan about it all you want, but it works and it works really well. But then, it will still take some time. But it would completely invalidate any argument you can make about the power of git.

This is precisely the reason I went with hg. I plan to get my feet wet with the latter and, if it sticks, remove svn from my vernacular forever. Once you use a DVCS and get used to even the basics, going backwards is like taking a trip back to the stone age. You mean I have to be connected to the internet to commit? The remote server has to be up? Hg Workbench combines basically every UI dialog into one location, which is amazingly useful. If I could choose, it would always be Hg, but there are things I pine for in Git, well, specifically one: gitflow.

But there is one complaint I could add: no ability to edit log messages after the fact. In SVN you can (if the repository admin enables it) tweak log messages after the fact, and it turns out to be really handy. It has many other uses as well. Hey, steveko. Because of it, editing log messages post facto is a conceptually consistent thing to do.

Can I use this as my homepage? I hate Git. The combination does a very good job, obviates the need for the command line, and has returned far more than the learning cost and the SmartGit licences: it works for us. There are built-in tools in git for that. This not only prevents accidental or intentional history rewriting but also keeps your sources safe if your git server gets hacked.

GPG does not solve 9. Or worse: pull, rewrite, push. That actually garbles the history of the contributor once he pulls, so he will have to rewrite his history, too, and all who pulled from him have to do the same. Arne Babenhauserheide: GPG does not solve 9. If you do not use the --rebase option when you pull the contributions, how does the rewrite get into your repository?

Or, if you are particularly strict (and you probably should be, if you are pining for svn), you could use --ff-only. If someone else does a rebase of stuff you already pulled, your history gets garbled, because you now have duplicate history. If you send a pull request and the other person does a rebase, you have to prune out your copy and rebase everything you did on top of the rewritten changes.
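A rough sketch of the check behind --ff-only, as toy Python rather than Git's code (the parents mapping and both function names are hypothetical): a fast-forward is possible only when your current tip is an ancestor of the incoming tip, so the branch pointer can simply move forward. If upstream has rebased commits you already pulled, your tip is no longer an ancestor, and the update is refused instead of merging in duplicate history. Real Git exposes the same ancestry test as git merge-base --is-ancestor.

```python
def is_ancestor(parents: dict, maybe_ancestor: str, tip: str) -> bool:
    """True if maybe_ancestor is reachable from tip via parent links."""
    stack, seen = [tip], set()
    while stack:
        commit = stack.pop()
        if commit == maybe_ancestor:
            return True
        if commit in seen:
            continue
        seen.add(commit)
        stack.extend(parents.get(commit, []))
    return False


def ff_only_pull(parents: dict, local_tip: str, remote_tip: str) -> str:
    """Return the new local tip, refusing anything but a fast-forward."""
    if is_ancestor(parents, local_tip, remote_tip):
        return remote_tip  # just move the branch pointer forward
    raise ValueError("cannot fast-forward: histories have diverged")
```

For instance, with the remote history A, B, C and a local tip at B, the pull moves you to C. If upstream then rewrites B as B2 on top of some new commit X, pulling B2 with this check fails, which is the strictness the comment recommends.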

Honestly, I did not read every single word of your post. To be fair, the learning curve is much steeper than svn's. This is not entirely because of the tool itself, but because it introduces many version-control ideas that seem odd coming from svn.

The versioning workflow in git is quite different, and it looks complicated at first, but once we get used to it, we find it very rational. Now I have come to the crossroads in my life. I always knew what the right path was. Without exception, I knew, but I never took it. You know why? It was too damn hard. He has chosen a path. Let him continue on his journey. Protect it. Embrace it. Second: I disagree that git does not provide meaningful abstractions. The abstractions git provides are very much like the abstractions provided by a file system, and once you understand them, they are simple to work with.

Third: I dislike some of the defaults that git provides; for example, I always have the people I work with put in their global config: push. But my distaste for those defaults is a reflection of the admin system provided by GitHub, and git was designed before GitHub. Finally, comparing git to svn is sort of like comparing Google Maps with a Tumblr. I can be totally in awe of the aesthetics of the Tumblr and say all I want about how Google Maps does not have those design characteristics.

And, in doing so, I would be pretty much missing the point of why people would like either one of them. Thank you for this well-timed post. I am familiar with at least 9 source control systems, and I am paid-administrator-proficient with 4 of those, including ClearCase and Perforce. Git is such a mighty pain in the rear that I just gave up ever working on any open source project that uses it.

Its learning curve is a sucktastic cliff and you really do need to know almost everything about it before you can stop being dangerous. No thanks. The tool should not be more complicated and inconsistent than the programming language source it protects.

But merging two branches in the same repository when you do not want the target branch to be the current branch? Why would you even want to do that? If you know git internals pretty well, you could probably find a way to forge a merge commit in memory without checking either branch out.

Just... why? Linus never, ever studied properly, even with such giants of learning as Tanenbaum around. But I fully agree that Mercurial achieves the same as git without introducing the same kind of complex interface. That does not make git a bad tool. It just makes it inferior, but heavily hyped. The first hated thing alone is enough to stop anyone who has really tried git from reading further.

The other 9 are also a pile of subjective shit, decorated with lovely graphics. No more command-line syntax to remember, and it shows you a nice log of all the branches and past commits. Just read your post for the first time, complete with the August update. But even back then, decentralized version control was not some new field of research. Linus was indeed bold to write his own system from scratch, and its success speaks for itself, but part of the reason he was free to do that was that he was the only constituent he had to satisfy.

But even if we had seriously considered it, I think SVN would still be a centralized system today. The MediaWiki team has been going through a lot of pain, adopting Gerrit as a code review and Git management tool. I guess my feeling in all this is that Git, or something equally powerful, is necessary but not sufficient for effective code management in distributed teams. Besides the basic version control functions, being intuitive and secure are the essential features of a version control tool.

If the tool cannot even manage the source code safely, then why use it? This new-fangled world with distributed workflows, and the new ideas that come with it, are too much. In other words, more than half of the rant is a person used to a wheelchair complaining about the complexity of using wings.

The rant is only useful to Git maintainers really, as a reminder that their user base is growing very diverse. Just choose Git. I have a feeling you approached git expecting it to be like svn and got disappointed. They are suited to completely different workflows.

Try managing the Linux kernel with svn... If you have a 10-developer project, maybe git is overkill, but if your project grows enough, then git is the best choice around.

Just a note about GitHub: many people use pull requests (PRs) as part of their daily workflow. A pull request should be opened early, very early, before any real changes have been made.

The PR serves as a staging ground for discussions about the change, and a place for code review to occur. You can open a PR between two branches in the same repository. When I work on a project we all have push access to the same repository, but we still use PRs to coordinate changes. Comparing Subversion to git is like comparing a single-speed bicycle to a Jeep Wrangler, or Notepad to Microsoft Word.

But once you learn the basic theory you have a much more powerful tool at your disposal. This article is like hearing a whiny teenager complain about how hard his calculus class is because he thinks algebra should be enough math to get through a Computer Science degree. Unfortunately, it was actually created because Linus needed a tool. It was created for Linus by Linus, and then he decided maybe other people would like it too and let everyone use it.

And if they do, they certainly have no right to complain about it. I was thinking that, right up until I read the actual examples. But that someone in your project decides what subset of Git features makes most sense to you. Your post has good points. From my perspective, git is awesome, and I can live with the complexity of the commands because a lot of the things I now do easily with git were painful and dangerous with the previous version control systems. CVS was incredibly limited and I was constantly repairing checkouts by editing files in the CVS directories.

Hmm, very interesting idea. That said, my impression is that people working to design new git user interfaces often have a rather limited view of what users will want to be doing, or what they should want to be doing, with git (for example: git flow). It would be nice to have a tool that is really, really simple for the simple things, yet also able to handle the more complex things when required, so it can be used alike by time-pressed non-programmers who would really prefer not to use version control at all and by experienced developers with sophisticated needs.

Sensible defaults, easy installation, good documentation, etc. Unfortunately, I suspect that the tool would have to have absolutely no interface whatsoever to stop them being bamboozled. It is tempting to take an arrogant line and give up on these individuals, but I strongly disagree. There are highly intelligent domain specialists with limited time and little interest in software engineering issues who nevertheless have a huge contribution to make to many projects.

Bringing their contributions under version control has a huge benefit in terms of communication, automation, organization, and team synchronization. Bottom Line: We need to make our tools simpler.

Not just a little bit simpler, but dramatically, radically, disappear-from-sight, zero-interaction simple. Telling people that they are ignorant is not the solution, particularly when they represent valuable specialist expertise to the organization. I would rather take a more conciliatory approach and save up my balance of goodwill for situations where it really matters (like the importance of keeping files neatly organized).

This is particularly true when the specialists in question view the software engineering function as something that is subordinate to them, and that should operate in the sole service of their objectives. It would really help me if the tools just got out of the way, and I did not have to apologize for them and the inconvenience that they cause. If I am using prepackaged software, and I am working with someone who does not want to deal with it, there are a variety of options open, including providing wrappers ("click on this icon"), engaging someone else to support them ("if this fails, talk to Sam"), and so on.

I agree with you that, amongst developers, learning new tools is rarely a significant issue, because we accept that as part of our job. I feel quite strongly that the same tools that we use to communicate, coordinate, synchronize and integrate work within an engineering team can also be used to fulfill the same needs across the entire organizational management structure, and that lack of systematic attention to such issues is the underlying reason for a great deal of inefficiency, waste and grief in a wide range of organizations.

I am an empiricist, and, as such, I think that empirical evidence is the most important, reliable source of truth in decision making. However, so many of our decision makers are so distant from the results of their decisions, so removed from empirical truth, and so distorted and disfigured by social and political pressures, that the quality of decisions in many organizations is simply execrable. I wish for that benefit, that feedback loop, to be extended more widely through the organization.

For people to cease hand-waving platitudes and generalizations, to get to grips with the detail of the problems that we face, and to realize that to make real progress, you need to do real work, not busywork. Moving the bulk of our source code from svn to git was probably the best thing we ever did in our company, but we set up a pretty significant process and automated-merge-to-master intranet page that tied in with our work tracking system.

We also left a bunch of third party stuff in svn because it just made more sense to not have every version of every binary on every clone of the git repo.

I originally got annoyed reading your rant until I realized it was pretty much a laundry list of everything we had to plumb around in getting widespread acceptance of a distributed source-control system in the company. Obviously we encourage GUIs like SmartGit, TortoiseGit and Git Extensions instead of the command line for everyone but the top end of power users, which paves over most of the usability complaints.

Yes, I wonder whether something like git flow could be integrated into the core git suite. Those commands would also be able to give much more verbose output, and be free from the dual requirements of human usability and scriptability. All the others have safe defaults. You could easily, for example, use svn to track the history of a branch managed in git, and you could use git to propagate changes introduced in that branch using svn.

The thing is: they solve different problems: svn keeps track of a history of document instances, while git deals with change management. I suppose, though, some people are saying that they do not need both features. People that are using svn but not git do not need to coordinate changes from a group of contributors, and people that use git but not svn do not need another copy of the document history.

Do remember that SVN has real branches, though they are implemented via the file system. Too many states: this has been mentioned a few times now (detached HEAD, merge conflicted, in-the-middle-of-that-but-got-stuck, etc.). Do we need them all? This brings me on to: if something fucks up, talk me through the issue. This is carried through into GUIs for Git. Why not bring up a diff tool at that point? GUIs: ever try to learn about revision control? Read a blog about it? Get a book?

Have a buddy explain it? They ALL use diagrams (at least the good ones do). Diagrams, AKA graphics. Why not use that for git, and combine it with the user interface? We could call it a GUI! Why not make a tool that covers the corner cases (in fact, not even the corner cases, just the edge cases, or the top-left-quadrant cases, or the non-svn-user case) so you don't end up taking a user, confusing them, then sending them to the command line? I suppose I am spoilt by this.

I still get stuck and confused, and my head still gets detached, and then you have to guess which reset command to use.

Some good points. Your point 13 is well made, too. Much of the complexity of Git revolves around trying to construct the right representation in your head, then taking appropriate steps to resolve it. Perhaps there is one already, but it needs to be free. I played with TortoiseHg today without really reading the manual. I can commit, merge, rebase, revert and push to SVN entirely from the GUI. I got into some messed-up states and broke a few things, but even so, after initial setup I never typed hg again and solved all issues through the GUI.

In fact, the only issues I really had were because I was doing operations against an SVN repository, which TortoiseHg (self-admittedly) has poor support for. The best part was that it provided a simple graph, so I could see all the changes I was making as I made them. The UI is waaayyy too hard.

Even using EGit in Eclipse is still complicated. And no, I am not dumb or lazy; I just need to write code and commit it. Back to SVN for me. I could intuitively make it work, even doing reasonably complicated things like rebasing; I managed to get it to work with a bit of playing around. I will agree with you on exactly 2 points: the git command-line tools are inconsistent and suck, and the git man pages need to be improved. Your other points either stem from this, or are simply wrong.

Complex information model? The only reason I know about them is because the man pages mention them for some reason. The way branches and tags etc. work is exactly how I imagined they would before I was introduced to Subversion. So your problems there are mostly to do with your familiarity with Subversion, I guess. I think the fact that you use GitHub adds complexity that you are attributing to git. We have our own server where people can pull and push, so that eliminates several of your complaints already.

Naturally every other complaint when using the tool stems from these, because they describe the whole default user interface.

And the only way to avoid them is not using the tool directly, but using some program which uses the tool. You could say that you can improve every interface by not using it. I hope git is not the final stop in the evolution of source control. You can do it, but it has much higher friction than being able to use one language for one project. Arne Babenhauserheide: you can, but they have different paradigms, so every time you switch you have to change your way of doing things (except if you use emacs).

Personally, I use a variety of languages, and that seems to me to be a very different experience from someone coming up to me and asking me to drop what I am doing and work on something else.

And since I use them all anyway, it's automatic for me when I use them. That's why I like using all of them. I have no problem interacting with git via emacs, because it is exactly the same as interacting with Mercurial or SVN.

But an augmented GUI which works differently for the different systems is still a context switch, which creates friction. Arne Babenhauserheide: rdm: And everyone who pulled from you has to do the same. Seriously, the whole reason pulls are not automated is so that you can exercise judgement about which pulls to make, and when. Problems should be fixed by the person introducing them. That creates a schism in the project, and from there on it goes downhill.

The problem is brought upon you by someone else, but git makes it easy for maintainers to screw with contributors and hard for contributors to recover.

Git makes it easy to rewrite published history (you can even do so remotely)! When you are using git, you need to have a code review process before you accept changes. If you define your policies properly, you might even be able to automate this process (allowing anything that can be fast-forwarded, for example). That is still too short: code review processes are exactly what creates the problem. Some code is not accepted, but someone has already pulled it from another repo; then it is rebased, improved and accepted.

Suddenly you have two versions of mostly the same changes floating around, which everyone has to reconcile by hand, changing all the code they based on them. A tool which makes it that easy to break lots of other people's code should have better ways to recover. But all you have is rebasing, which multiplies the effort when people share code very actively.

And git offers nothing to recover from that condition. Arne Babenhauserheide: Rather, it forces you to give your changes names which you will throw away. You are not forced to use names which you will throw away. For example, you could use your own name.

And you can keep using that same branch name for all of your changes, if that seems to be a good thing for you. Or you could use initials and the current date. Or you can create a file documenting the changes and you can use the same name for your branch that you used for the file. Or, you know, you could just stay on master and try to do all of your work there. That might not be the best idea, but it can be done. Same for time and anything else with which you try to replicate anonymous branching.

It has no information correlation to the repo I work in, so it is just useless effort forced on me by the lack of anonymous branching. Staying on master is hard for the same reason: lack of anonymous branching. That could be fixed, though, by automatically creating a branch with a random name whenever someone commits on master.

So, if you are seriously talking about modifying git, why not introduce new commands that work the way you want them to work? Mercurial already does all that. And since the Mercurial devs discuss the interface in depth before adding anything, the number of unintuitive options is extremely low. So you are not actually trying to solve the problem of how to improve the UI. You are just finding reasons to object to it.

Just say what you like about hg and have fun with it. I am forced to use git in some projects (or rather: was forced, until I switched to using hg-git), and I got bitten by it quite badly a few times. Because of that, I think that promoting git over equally powerful but easier-to-use solutions is a problem for making free DVCS systems the de facto standard, with the goal of replacing all unfree versioning tools, from VSS and ClearCase to TimeMachine. And those are the people who could really help free software.

Please read this with a grain of salt: Git is a great tool. I just think that it is not the best, despite its popularity, and that other tools would draw more people towards free software and DVCS and, even more importantly, alienate fewer people. In my mind the biggest problem is that I need to tell everyone I work with to use: I own my local repository, and it can only include changes that I bring into it.

And if someone creates a problem for someone else, that person is responsible for fixing it. That last concept is worth repeating: If you are working with people that will not take responsibility for fixing the problems that they create, your life is going to be unpleasant.

Personally, I think that that problem should not be considered a technical problem, but a social problem. I do wish that git could be configured to never allow a local name for a branch to differ from the remote name for a branch.

But by restricting myself to the above commands (and note that I never name a branch during push or pull), I get close enough to that ideal to get my job done. I think we should ignore ignorant comments like that. But if they want to have a coherent discussion about the problems that they are encountering, and I have the time and interest, I might try seeing if I can talk with them on some sort of reasonable basis. If you manage to get people to restrict themselves to those commands, you should have a quite solid setup which people can enter nicely.

Is there any reason to not prune? If it exists in origin and not locally, why should I ever not want the branch from origin? But restricting checkout to getting branches is the best reason I ever saw for forcing people to use branches. Pull vs. You should only need pull, if you work on a remote branch, after all.

So cut pull and always merge remote branches explicitly. Though it would be nice to be able to branch it instead of having to copy your commands. Being able to show a diff between your commands and those I consider mostly sane: In Linux kernel development you have clear hierarchies: there is Linus and there are his lieutenants. Some lieutenants release special builds of their domain. And you have groups working on a clearly limited area of the kernel, who rebase once their changes get accepted.

Now imagine this as a writing group (I am a hobby author, so my VCS has to work for that task, too). Now the rewritten part gets accepted, but rebased, so history appears linear. You might have someone in charge of publishing the final book, but if the writers coordinate independently, he can either not rebase at all, or break their work repeatedly. As such, git cements a hierarchy: it makes it less likely that the non-leaders build their own versions with changes from different groups, because doing so means that any rebasing on the part of the leader would create a big burden for all of them.

But git itself does not help you with that: it will happily break other people's history without even giving a warning. It does not track for you what has been published (and thus should no longer be rewritten), or whether a given rebase would be safe. You have to keep all that in your mind, where you would normally rather keep more of the structure of your code.
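The check the commenter wishes git performed can be sketched in a few lines. This is a minimal illustration over a hypothetical in-memory commit graph (a dict mapping each commit to its parents), not git's own API. A rebase of `branch_head` onto `upstream_head` rewrites every commit reachable from the branch but not from upstream. It is only safe if none of those commits is reachable from a head that others may already have pulled. In a real repository, `git branch -r --contains <commit>` gives a rough approximation of the "already published" test.

```python
from collections import deque


def reachable(parents: dict[str, list[str]], heads: list[str]) -> set[str]:
    """Return every commit reachable from `heads` by following parent links."""
    seen: set[str] = set()
    queue = deque(heads)
    while queue:
        commit = queue.popleft()
        if commit in seen:
            continue
        seen.add(commit)
        queue.extend(parents.get(commit, []))
    return seen


def rebase_is_safe(parents: dict[str, list[str]], branch_head: str,
                   upstream_head: str, published_heads: list[str]) -> bool:
    """True if rebasing branch_head onto upstream_head rewrites no published commit."""
    # Commits the rebase would replace with new copies.
    rewritten = reachable(parents, [branch_head]) - reachable(parents, [upstream_head])
    # Commits other people may already have pulled.
    published = reachable(parents, published_heads)
    return rewritten.isdisjoint(published)


# Hypothetical history: main is a-b-c, a feature branch forked at b as d-e.
history = {"a": [], "b": ["a"], "c": ["b"], "d": ["b"], "e": ["d"]}
print(rebase_is_safe(history, "e", "c", published_heads=["c"]))       # True
print(rebase_is_safe(history, "e", "c", published_heads=["c", "d"]))  # False
```

The second call returns False because commit `d` has already been pulled by someone else, which is exactly the situation that leaves two versions of the same changes floating around.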

So git makes it very easy for you to make mistakes, but really hard to fix them, because other people have to fix your mistakes in their repositories. And that irritates me. Right: do not accept rebases.

Anyways, if someone sent me a rebase and I wanted the change, I might accept the changed content without accepting the history change. Drak: If you manage to get people to restrict themselves to those commands, you should have a quite solid setup which people can enter nicely.

But I think that these are already far too many commands. Still too much, but hard to get smaller. But well: It should. Being able to show a diff between your commands and those I consider mostly sane. I advise people not to use git branch with a branch name. When your team is productively generating several branches an hour, after a few months things can start slowing down.

So someone needs to delete the branches from the remote, and fetch --prune copies those deletions locally. It does not have that right now, though, so I have to cope with this issue somehow. The workaround is: when you reboot your machine, run git gc in your active repository. I can easily imagine a new command set which is more technical, requiring explicit keywords to represent each of these three data structures.

Anyways: checkout without -b updates working copy. All of this is additive only. So this brings down everything from git and possibly chokes if something conflicting has happened. So far, the one time that that has happened, I went to the developer who caused the problem, talked to him about it, and he cleaned up the problem. But I forgot to mention git branch -a in my list of commands we use and perhaps in single remote mode it should do an implicit git fetch, if it can.

Anyways, not everyone uses github, and things that seem necessary when using github can be silly in other contexts. So, anyways, my thing has been to discover enough about how git works to use it, as is, sensibly.

So far those issues all seem to have revolved around the idea that local branch names can be different from remote branch names. For fetch without --prune, I think it would be nice to have it automatically prune those branches which are neither checked out nor changed locally nor ancestors of local branches. That way you would likely never need --prune, because all useless remote branches would disappear automatically and the others would stick. Mercurial additionally has diverging-change detection, though: named branches can have multiple heads, and diverging bookmarks automatically get a suffix added to the remote bookmark.

When you pull the changes from him with Mercurial, you now get either the refactor branch with 2 heads (for named branches) or the bookmarks refactor and refactor@mark.

If you use it with a shared repository, the bookmarks would instead be named refactor and refactor@default, since the default remote repository is called default in Mercurial. Arne Babenhauserheide: For fetch without --prune, I think it would be nice to have it prune those branches automatically which are neither checked out nor changed locally nor ancestors of local branches.

That could be annoying. In other words, it would assume that upstream was available and that any remote branch exists locally: the local repository would be a cache of the remote. Tags and reflog may be ignored. Web interfaces like cgit can produce one for an arbitrary hash, if you do at some point need one just to bypass the revision control system.

People mentioned it: git fsck. Yes, history is the important product, because it can reduce the amount of WTFs. Or a system which works better for them. History can help, but good code comes first. And if you worry too much about the history, it can have a bad impact on your code. How best to learn Git?

Overall, I understand your frustrations with git. I probably once shared them, but as I get more and more used to git, I find myself bumping into the sharp edges less often.

What capricious use of random letters for commands! And that you have to know to choose checkout. That means that my perception today is skewed, but for good reasons. Your top ten is very fair IMHO. I guess your point 7 is my biggest concern. For a company to keep a central repo of their expensive IP requires someone to babysit it so that no idiot can rebase the history into oblivion.

This is a crazy waste of time and money. Hoping that someone has an untarnished clone of it on a PC somewhere that you can recover is not a mitigation strategy! Second, CM should be as close to invisible as possible for the humble developer. A company pays them good money to develop IP. While they are doing that, your other n-1 developers will be sat there waiting patiently. You always need someone who knows the internals of an SCM system, who can set up the branches and the integration strategy.

You could have a look at Mercurial. An example workflow I wrote just requires the developers to commit and merge, and I can explain it in 3 steps: Only maintainers touch stable. They always start at default, since you do all the work on default.

More advanced developers can use feature branches and a host of other features, but no one has to. Yep, you have to take a solid week out of your life and work schedule to learn the monster. Get the concepts, then use SmartGit, is my plan. Thanks for the post. While they are smart, they are not brilliant. From my time in the field, it seems like a lot of people have forgone actually looking at the problem of VCS.

Most chose a centralized one and left the developers to fight with it. In my opinion, things like Eclipse cover up the giant gaping hole in centralized versioning systems. I think Linus saw the flaws in existing tools and truly tried to create one that would help everyone.

It IS complex, but that complexity comes with great flexibility. I am able to use Git to produce just about any imaginable workflow. Local branching support and stash are the bar for how much git will be of use to you. There are people who would rather work surrounded by a local VCS bubble in which they can make their changes without being affected by the unwashed masses.

I find commits important. I find what I commit on what branch to be important. I want to keep around experimental changes locally in case I need to use them again. Git is a replacement for not only a centralized version control system, but also a replacement for Eclipse local history. It functions in both ways and allows you to work independently of what you commit, allowing you to push what you want when you want where you want.

I have not seen another VCS even come close when you look at the ideas it abstracts. But is VI not difficult to learn at first? Was bash a walk in the park? I find git better than the alternatives because after I learned the basics of what I was actually trying to do, I can stack overflow a way to get there.

People who like to get shit done without the tooling getting in their way. I cannot tell you how many times I wound up resorting to making a copy of my whole workspace just so I could get SVN or CVS out of some broken state in Eclipse, and then had to pull diffs back in.

I have never done so with Git, because I never had the need to. Let it help you. Mercurial showed me that it is possible to have all that flexibility without the complexity of git. The decentralized model gives a lot of flexibility and I would not want to work without that.

But git makes it pretty hard for normal developers to harness the power of the model while Mercurial makes it really easy, giving all the safety and convenience without the pain of git. So I disagree with your sentence. It implies that the complexity of git is required to get the flexibility of the decentralized model. And that is not true. SVN is, indeed, very simple to understand.

I know from the people who do training at my company that most people, even non-technical ones, are able to work with it just fine after one hour of basic training. And merging is merging: the git heads often claim to save a lot of time on merging with their great system, while in fact most time is spent resolving conflicts in the actual contents. And a VCS can hardly help you there. Did you read the same article above as I did?

Git is like a Ferrari covered in fresh dog turd.

Overall equipment effectiveness (OEE) is defined as the product of system availability, cycle time efficiency and quality rate. OEE is typically used as a key performance indicator (KPI) in conjunction with the lean manufacturing approach. Designing the configuration of production systems involves both technological and organizational variables. Choices in production technology involve: dimensioning capacity, fractioning capacity, capacity location, outsourcing processes, process technology, automation of operations, and the trade-off between volume and variety (see the Hayes-Wheelwright matrix).
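As a worked example of the OEE definition above, the sketch below multiplies the three factors. Treating each factor as a fraction between 0 and 1, and using the common label "performance" for cycle time efficiency, are assumptions of this illustration. The figures in the example are made up.

```python
def oee(availability: float, performance: float, quality: float) -> float:
    """Overall equipment effectiveness as the product of three fractions in [0, 1]."""
    for name, value in (("availability", availability),
                        ("performance", performance),
                        ("quality", quality)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1, got {value}")
    return availability * performance * quality


# Hypothetical line: up 90% of planned time, running at 95% of ideal cycle
# time, with 99% of output good.
print(f"{oee(0.90, 0.95, 0.99):.1%}")  # 84.6%
```

Because the factors multiply, three individually respectable figures still leave roughly 15% of theoretical capacity unused.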

Choices in the organizational area involve: defining worker skills and responsibilities, team coordination, worker incentives and information flow. Regarding production planning, there is a basic distinction between the push approach and the pull approach, with the latter including the singular approach of just in time. Pull means that the production system authorizes production based on inventory level; push means that production occurs based on demand (forecasted or present, that is, purchase orders).
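The push/pull distinction can be made concrete with two toy release rules. Both functions and their parameters (`reorder_level`, `lot_size`, `firm_orders`) are simplifying assumptions for illustration, as is the choice to cover the larger of forecast and firm orders. They do not represent a standard planning algorithm.

```python
def pull_release(inventory: int, reorder_level: int, lot_size: int) -> int:
    """Pull: authorize a lot only when inventory has fallen to the reorder level."""
    return lot_size if inventory <= reorder_level else 0


def push_release(forecast: int, firm_orders: int, inventory: int) -> int:
    """Push: produce to cover expected demand (forecast or firm orders) net of stock."""
    demand = max(forecast, firm_orders)
    return max(demand - inventory, 0)


print(pull_release(inventory=8, reorder_level=10, lot_size=50))   # 50
print(pull_release(inventory=40, reorder_level=10, lot_size=50))  # 0
print(push_release(forecast=100, firm_orders=80, inventory=30))   # 70
```

The pull rule looks only at the stock level, while the push rule looks only at demand. That split is the one the paragraph above draws.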

An individual production system can be both push and pull; for example, activities before the CODP (customer order decoupling point) may work under a pull system, while activities after the CODP may work under a push system. Regarding the traditional pull approach to inventory control, a number of techniques have been developed based on the work of Ford W. Harris [17], which came to be known as the economic order quantity (EOQ) model. This model marks the beginning of inventory theory, which includes the Wagner-Whitin procedure, the newsvendor model, the base stock model and the fixed time period model. These models usually involve the calculation of cycle stocks and buffer stocks, the latter usually modeled as a function of demand variability. The economic production quantity [42] (EPQ) model differs from the EOQ model only in that it assumes a constant fill rate for the part being produced, instead of the instantaneous refilling of the EOQ model.
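The EOQ and EPQ models reduce to short closed-form expressions. The sketch below uses the usual textbook forms, EOQ = sqrt(2DK/h) and EPQ = sqrt(2DK / (h(1 - D/P))). Here D is annual demand, K is the fixed cost per order or setup, h is the holding cost per unit per year, and P is the production rate, which must exceed D. These symbols and the example figures are introduced here for illustration; they are not taken from the text.

```python
import math


def eoq(demand: float, order_cost: float, holding_cost: float) -> float:
    """Economic order quantity: sqrt(2 D K / h)."""
    return math.sqrt(2 * demand * order_cost / holding_cost)


def epq(demand: float, setup_cost: float, holding_cost: float,
        production_rate: float) -> float:
    """Economic production quantity: sqrt(2 D K / (h (1 - D / P))).

    Stock builds up gradually at rate P - D instead of arriving all at once,
    so the effective holding cost shrinks by the factor (1 - D / P).
    """
    if production_rate <= demand:
        raise ValueError("production rate must exceed demand")
    return math.sqrt(2 * demand * setup_cost
                     / (holding_cost * (1 - demand / production_rate)))


# Hypothetical item: 1000 units/year, 50 per order, 2 per unit-year to hold.
print(round(eoq(1000, 50, 2), 1))        # 223.6
print(round(epq(1000, 50, 2, 5000), 1))  # 250.0
```

The EPQ lot is larger than the EOQ lot because, with gradual filling, average stock per lot is lower.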

Joseph Orlicky and others at IBM developed a push approach to inventory control and production planning, now known as material requirements planning (MRP), which takes as input both the master production schedule (MPS) and the bill of materials (BOM) and gives as output a schedule for the materials (components) needed in the production process. MRP is therefore a planning tool to manage purchase orders and production orders (also called jobs). The MPS can be seen as a kind of aggregate planning for production, coming in two fundamentally opposing varieties: chase plans, which try to follow demand, and level plans, which try to keep capacity utilization uniform.

Many models have been proposed to solve MPS problems: MRP can be briefly described as a 3S procedure: sum (different orders), split (in lots), shift (in time, according to item lead time).
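The 3S description maps directly onto a few lines of code. This is a minimal single-item sketch under stated assumptions: a fixed lot size, a fixed lead time in whole periods, and no netting against on-hand stock or scheduled receipts (a real MRP run would net those first). The function name and example orders are hypothetical.

```python
import math
from collections import defaultdict


def mrp_3s(orders: list[tuple[int, int]], lot_size: int,
           lead_time: int) -> dict[int, int]:
    """Planned order releases {release_period: quantity} for one item."""
    # Sum: aggregate gross requirements that fall due in the same period.
    due: dict[int, int] = defaultdict(int)
    for period, quantity in orders:
        due[period] += quantity

    releases: dict[int, int] = {}
    for period in sorted(due):
        # Split: round the requirement up to a whole number of lots.
        quantity = math.ceil(due[period] / lot_size) * lot_size
        # Shift: release the order `lead_time` periods before it is due.
        releases[period - lead_time] = quantity
    return releases


# Hypothetical gross requirements as (due period, quantity).
print(mrp_3s([(5, 30), (5, 25), (7, 10)], lot_size=20, lead_time=2))  # {3: 60, 5: 20}
```

Applied level by level down the BOM, the releases of a parent item become the gross requirements of its components. This is where the data "explosion" mentioned in the next paragraph comes from.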

To avoid an "explosion" of data processing in MRP (the number of BOMs required as input), planning bills such as family bills or super bills can be useful, since they allow a rationalization of input data into common codes. MRP had some notorious problems, such as infinite capacity and fixed lead times, which influenced successive modifications of the original software architecture in the form of MRP II, enterprise resource planning (ERP) and advanced planning and scheduling (APS).

In this context, problems of scheduling (sequencing of production), loading (tools to use), part type selection (parts to work on) and applications of operations research have a significant role to play. Lean manufacturing is an approach to production which arose at Toyota between the end of World War II and the seventies. It comes mainly from the ideas of Taiichi Ohno and Sakichi Toyoda, which are centered on the complementary notions of just in time and autonomation (jidoka), all aimed at reducing waste (usually applied in PDCA style).

Some additional elements are also fundamental [43]: production smoothing (heijunka), capacity buffers, setup reduction, cross-training and plant layout.

A series of tools has been developed, mainly with the objective of replicating Toyota's success: a very common implementation involves small cards known as kanbans; these also come in several varieties: reorder kanbans, alarm kanbans, triangular kanbans, etc.

In the classic kanban procedure with one card: Since the number of kanbans in the production system is set by managers as a constant, the kanban procedure works as a WIP-controlling device, which, for a given arrival rate, per Little's law, works as a lead-time-controlling device. At Toyota, the TPS represented more of a philosophy of production than a set of specific lean tools, the latter of which would include: Seen more broadly, JIT can include methods such as product standardization and modularity, group technology, total productive maintenance, job enlargement, job enrichment, flat organization and vendor rating. JIT production is very sensitive to replenishment conditions.
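Per Little's law, average WIP equals throughput (arrival rate) times average lead time, so capping WIP with a fixed number of kanbans caps lead time. The sketch assumes that each kanban authorizes one container of a fixed size and that the loop is saturated, so WIP sits at the cap. The function names and figures are illustrative.

```python
import math


def lead_time(num_kanbans: int, container_size: int, arrival_rate: float) -> float:
    """Average lead time W = WIP / lambda when WIP is held at the kanban cap."""
    wip = num_kanbans * container_size
    return wip / arrival_rate


def kanbans_needed(replenishment_time: float, container_size: int,
                   arrival_rate: float) -> int:
    """Cards needed to cover demand during one replenishment lead time (no safety factor)."""
    return math.ceil(arrival_rate * replenishment_time / container_size)


# Hypothetical cell: 25 parts/hour demand, 5 parts per container.
print(lead_time(num_kanbans=10, container_size=5, arrival_rate=25))              # 2.0 (hours)
print(kanbans_needed(replenishment_time=3, container_size=5, arrival_rate=25))  # 15
```

Adding cards raises WIP and, at the same throughput, lengthens lead time. Removing them does the opposite, which is why the card count works as a control knob.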

In heavily automated production systems, production planning and information gathering may be executed via the control system; attention should be paid, however, to avoid problems such as deadlocks, as these can lead to productivity losses.

Project Production Management (PPM) applies the concepts of operations management to the execution and delivery of capital projects by viewing the sequence of activities in a project as a production system. Service industries are a major part of economic activity and employment in all industrialized countries, comprising 80 percent of employment and GDP in the U.S.

Operations management of these services, as distinct from manufacturing, has been developing for decades through publication of unique practices and academic research. According to Fitzsimmons, Fitzsimmons and Bordoloi, the differences between manufactured goods and services are as follows [47]: These four comparisons indicate how the management of service operations is quite different from manufacturing regarding such issues as capacity requirements (highly variable), quality assurance (hard to quantify), location of facilities (dispersed), and interaction with the customer during delivery of the service (product and process design).

While there are differences, there are also many similarities. For example, quality management approaches used in manufacturing, such as the Baldrige Award and Six Sigma, have been widely applied to services.

Likewise, lean service principles and practices have also been applied in service operations. The important difference is that the customer is in the system while the service is being provided, and this needs to be considered when applying these practices. One important difference is service recovery. When an error occurs in service delivery, the recovery must be delivered on the spot by the service provider. If a waiter in a restaurant spills soup on the customer's lap, then the recovery could include a free meal and a promise of free dry cleaning.

Another difference is in capacity planning. Since the product cannot be stored, the service facility must be managed to peak demand, which requires more flexibility than manufacturing. Facilities must be located near the customers, and economies of scale can be lacking. Scheduling must consider that the customer may be waiting in line.

Queueing theory has been devised to assist in the design of service facility waiting lines. Revenue management is important for service operations, since empty seats on an airplane are lost revenue once the plane departs and cannot be stored for future use. There are also fields of mathematical theory which have found applications in operations management, such as operations research (mainly mathematical optimization problems) and queueing theory.

Queueing theory is employed in modelling queues and processing times in production systems, while mathematical optimization draws heavily on multivariate calculus and linear algebra.
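As a minimal example of the kind of model queueing theory supplies, the classic M/M/1 queue (Poisson arrivals at rate λ, exponential service at rate μ, one server) has closed-form steady-state measures. M/M/1 is chosen here purely for illustration. Real production lines often need more general models or simulation.

```python
def mm1_metrics(arrival_rate: float, service_rate: float) -> dict[str, float]:
    """Steady-state measures of an M/M/1 queue; requires arrival_rate < service_rate."""
    if not 0 < arrival_rate < service_rate:
        raise ValueError("need 0 < arrival_rate < service_rate for a stable queue")
    rho = arrival_rate / service_rate  # server utilization
    return {
        "utilization": rho,
        "L": rho / (1 - rho),                       # mean number in system
        "Lq": rho ** 2 / (1 - rho),                 # mean number waiting
        "W": 1 / (service_rate - arrival_rate),     # mean time in system
        "Wq": rho / (service_rate - arrival_rate),  # mean wait before service
    }


# Hypothetical station: 4 jobs/hour arrive, 5 jobs/hour can be served.
for name, value in mm1_metrics(4, 5).items():
    print(f"{name}: {value:.2f}")
```

With λ = 4 and μ = 5 this gives utilization 0.8, L = 4 jobs and W = 1 hour, which is consistent with Little's law L = λW. Pushing utilization toward 1 makes both L and W grow without bound.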

Queueing theory is based on Markov chains and stochastic processes. When analytical models are not enough, managers may resort to simulation. Simulation has traditionally been done through the discrete event simulation paradigm, where the simulation model possesses a state which can only change when a discrete event happens; the model consists of a clock and a list of events.
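A discrete event simulation in this sense needs only a clock, a state, and an event list ordered by time. The sketch below simulates a first-in, first-out single-server station, using a priority queue as the event list. Deterministic arrival and service times are used so the result is easy to check by hand. Everything here (names, event kinds, data) is illustrative rather than taken from a specific simulation package.

```python
import heapq
from collections import deque


def simulate_single_server(arrivals: list[float], service: list[float]) -> list[float]:
    """Departure time of each customer at a FIFO single-server station."""
    events: list[tuple[float, int, str, int]] = []  # (time, tie-breaker, kind, customer)
    counter = 0
    for customer, time in enumerate(arrivals):
        heapq.heappush(events, (time, counter, "arrival", customer))
        counter += 1

    clock = 0.0                    # simulation clock: jumps from event to event
    busy = False                   # state: is the server occupied?
    waiting: deque[int] = deque()  # state: customers queued for the server
    departures: dict[int, float] = {}

    while events:
        clock, _, kind, customer = heapq.heappop(events)
        if kind == "arrival" and busy:
            waiting.append(customer)
        elif kind == "arrival":
            busy = True
            heapq.heappush(events, (clock + service[customer], counter, "departure", customer))
            counter += 1
        else:  # departure: record it, then start the next waiting customer, if any
            departures[customer] = clock
            if waiting:
                nxt = waiting.popleft()
                heapq.heappush(events, (clock + service[nxt], counter, "departure", nxt))
                counter += 1
            else:
                busy = False
    return [departures[c] for c in range(len(arrivals))]


print(simulate_single_server([0, 1, 2], [3, 1, 2]))  # [3, 4, 6]
```

The state changes only when an event is popped, and the clock jumps directly from one event time to the next instead of advancing in fixed steps.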

The more recent transaction-level modeling paradigm consists of a set of resources and a set of transactions: transactions move through a network of resources (nodes) according to a code, called a process. Since real production processes are always affected by disturbances in both inputs and outputs, many companies implement some form of quality management or quality control. The Seven Basic Tools of Quality designation provides a summary of commonly used tools:

These are used in approaches like total quality management and Six Sigma. Keeping quality under control is relevant both to increasing customer satisfaction and to reducing processing waste. Operations management textbooks usually cover demand forecasting, even though it is not strictly speaking an operations problem, because demand is related to some production system variables.

For example, a classic approach in dimensioning safety stocks requires calculating the standard deviation of forecast errors. Also, any serious discussion of capacity planning involves adjusting company outputs with market demands.
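One common textbook form of that calculation is safety stock = z × σ × √L. Here σ is the standard deviation of per-period forecast errors, L is the replenishment lead time in periods, and z is the standard normal quantile for the target cycle service level. The formula assumes roughly normal, independent errors and a fixed lead time. It is offered as an illustration, and the example errors are made up.

```python
import math
import statistics
from statistics import NormalDist


def safety_stock(forecast_errors: list[float], lead_time_periods: int,
                 service_level: float) -> float:
    """Safety stock = z * sigma_error * sqrt(lead time), assuming i.i.d. normal errors."""
    sigma = statistics.stdev(forecast_errors)  # sample std dev of past errors
    z = NormalDist().inv_cdf(service_level)    # about 1.645 for a 95% service level
    return z * sigma * math.sqrt(lead_time_periods)


# Hypothetical errors (actual minus forecast) for five past periods.
errors = [10, -5, 0, 5, -10]
print(round(safety_stock(errors, lead_time_periods=4, service_level=0.95), 1))  # 26.0
```

Halving the forecast error halves the safety stock. This is the link between forecasting quality and inventory that the paragraph above points to.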

Other important management problems involve maintenance policies [52] (see also reliability engineering and maintenance philosophy), safety management systems (see also safety engineering and risk management), facility management and supply chain integration. The following high-ranked [53] academic journals are concerned with operations management issues:

From Wikipedia, the free encyclopedia.


Chase, F. Jacobs, N. Operations Management: Processes and Supply Chains. Wren and A. The coming of the post-industrial society: a venture in social forecasting. New York: Basic Books. The Principles of Scientific Management. Also available from Project Gutenberg. American Society of Mechanical Engineers. Operations Research. Van Nostrand Company. 1st edition.

Maynard, J. Schwab, G. Harvard Business Review. McDonald's: Behind the Arches. New York: Bantam. FedEx Delivers. New York: Wiley. The Wal-Mart Effect. New York: Penguin Books. Hammer, J. Portioli, A.

Wortmann, Chapter: "A classification scheme for master production schedule", in Efficiency of Manufacturing Systems, C. Berg, D. French and B. Hill, Manufacturing Strategy: Text and Cases, 3rd ed. Hopp, M. Spearman, Factory Physics, 3rd ed.

Pound, J. Bell, and M. Shenoy and T. Service Management: Operations, Strategy and Technology. Operations Management. Upper Saddle River, N. London, England: Pearson. Katehakis, Derman.
